For my final project in Learning Machines, I forced a deep learning machine to watch every episode of The X-Files.

Watching every episode of The X-Files in high school on Netflix DVDs that came in the mail (remember those?) seemed like the thing to do. It was a great show, with 9 seasons of 20+ episodes apiece. So, it only seemed fair to provide a robot friend with the same experience.

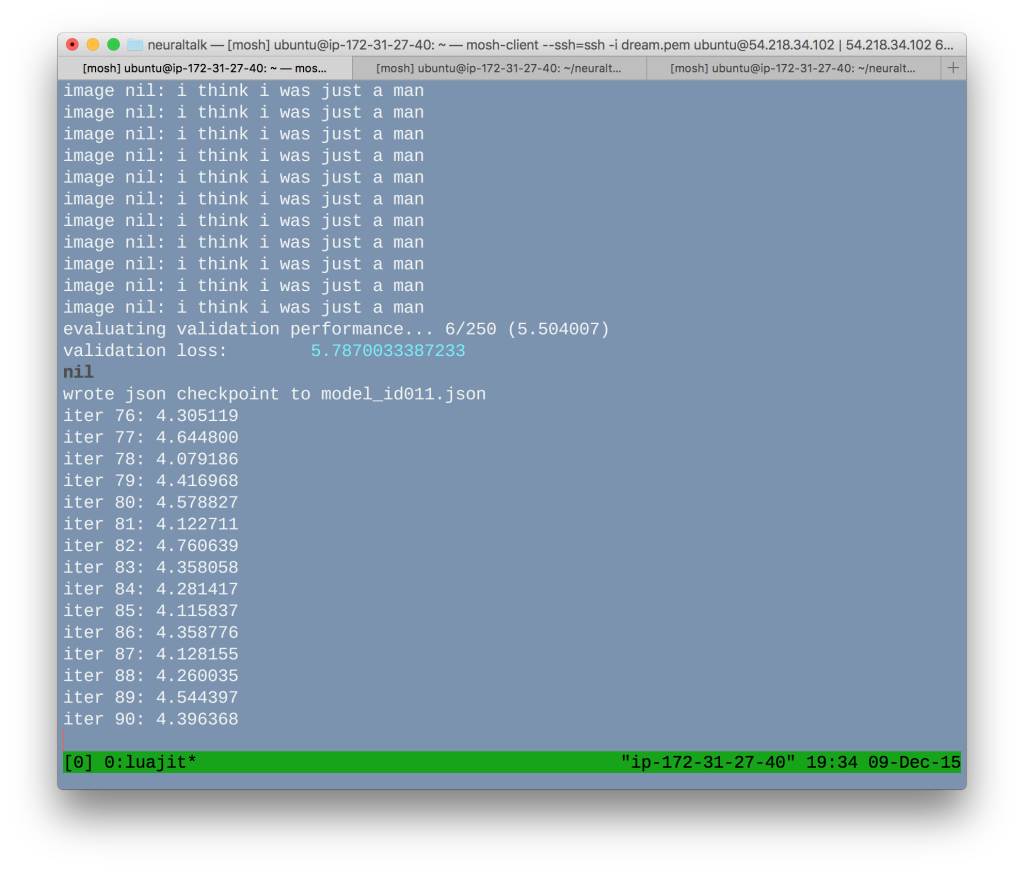

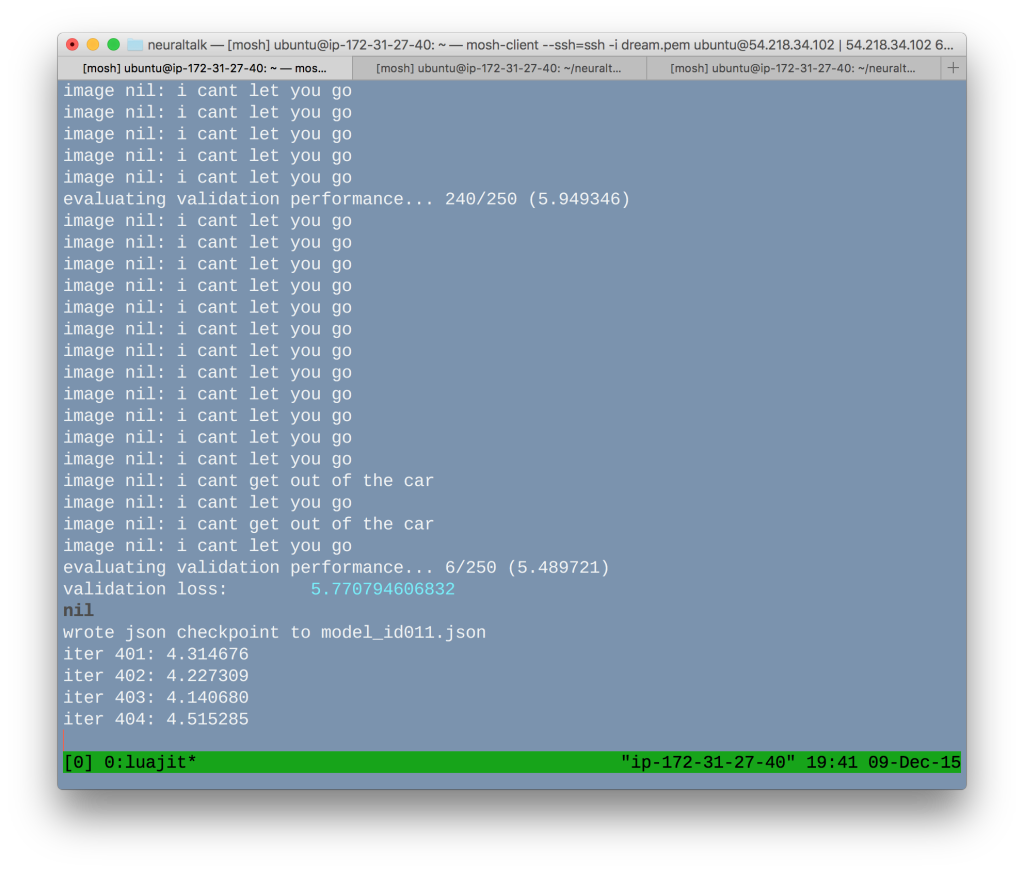

I’m currently running NeuralTalk2, a truly wonderful piece of open-source image captioning code built from convolutional and recurrent neural networks. Training models requires a GPU, so I’m running it on an Amazon Web Services GPU server instance. At ~50 cents per hour, it’s a lot more expensive than Netflix.

Andrej Karpathy wrote NeuralTalk2 in Torch, which is built on Lua, and it has a lot of dependencies. Even so, it was a lot easier to set up than the Deep Dream code I experimented with over the summer.

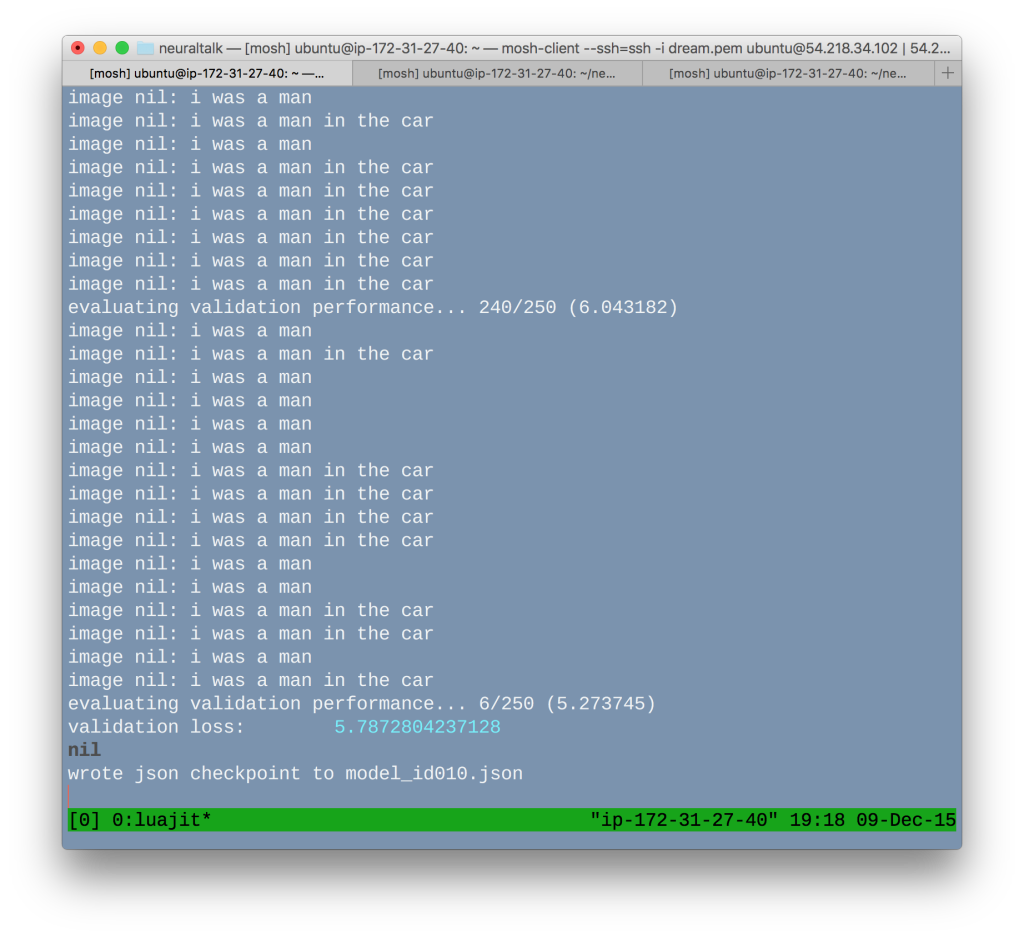

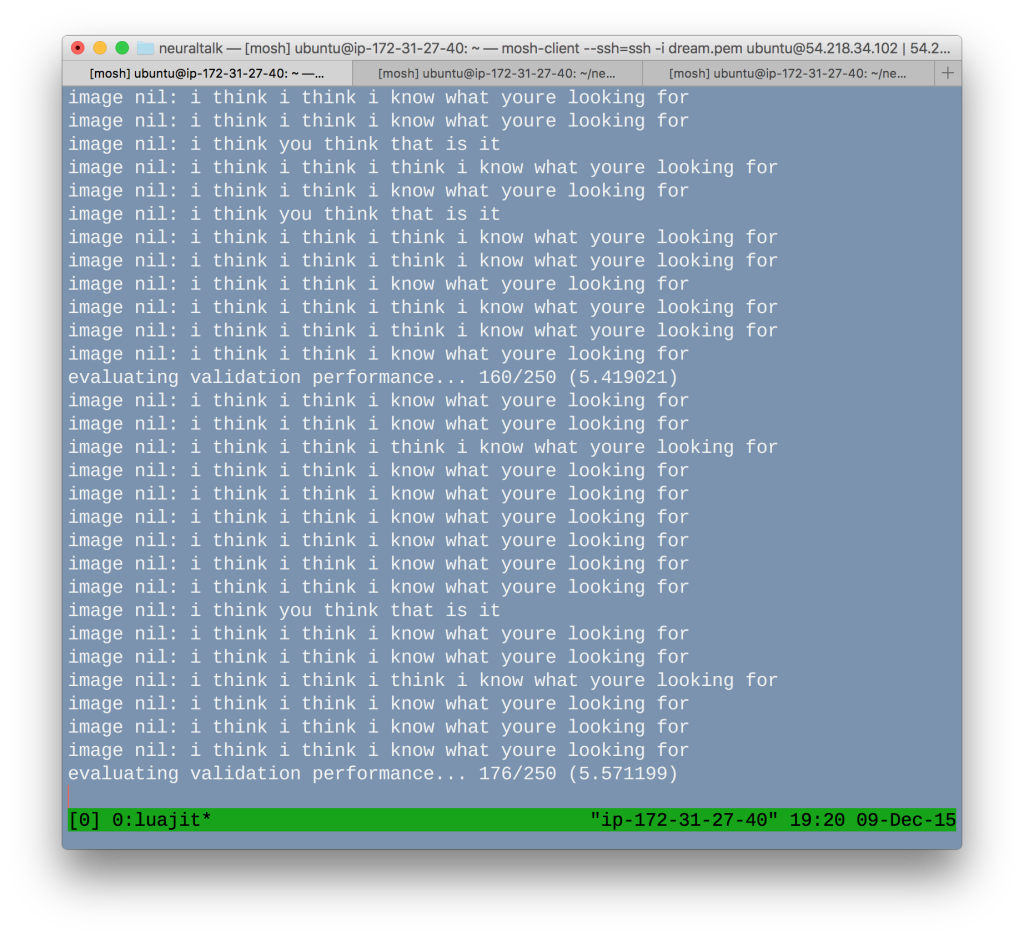

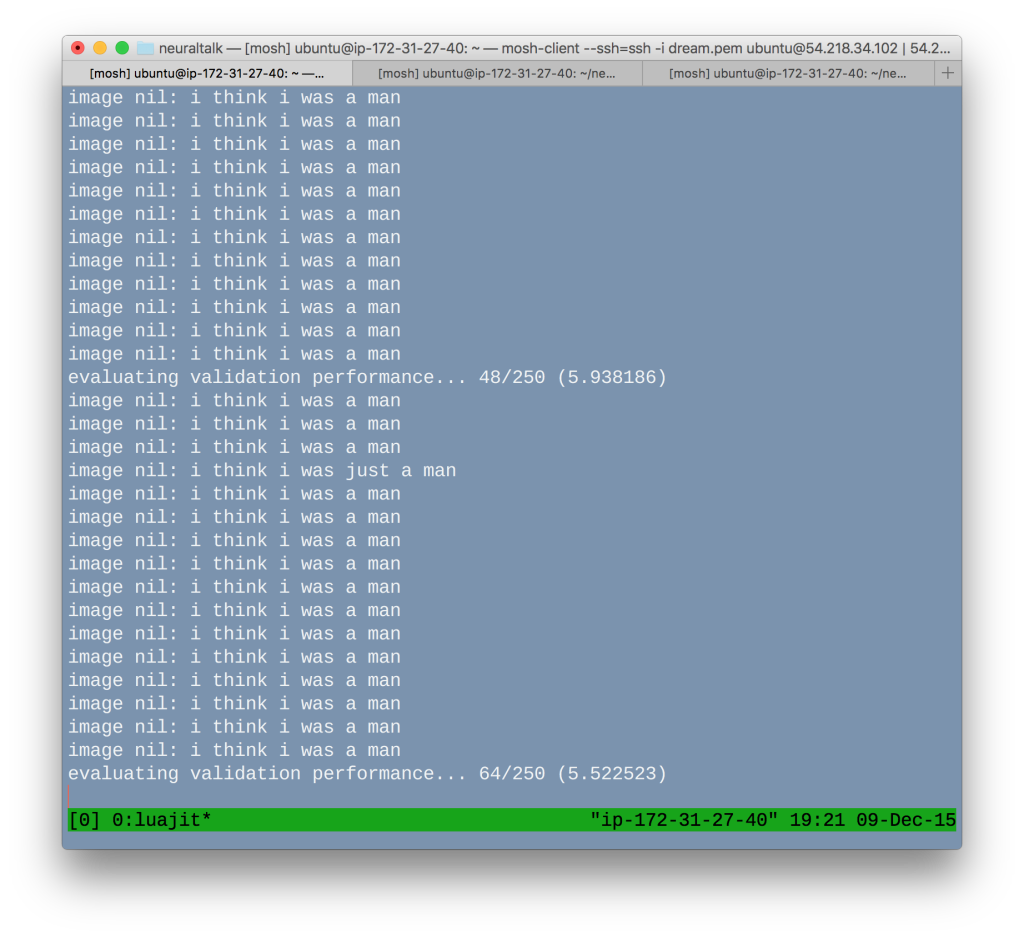

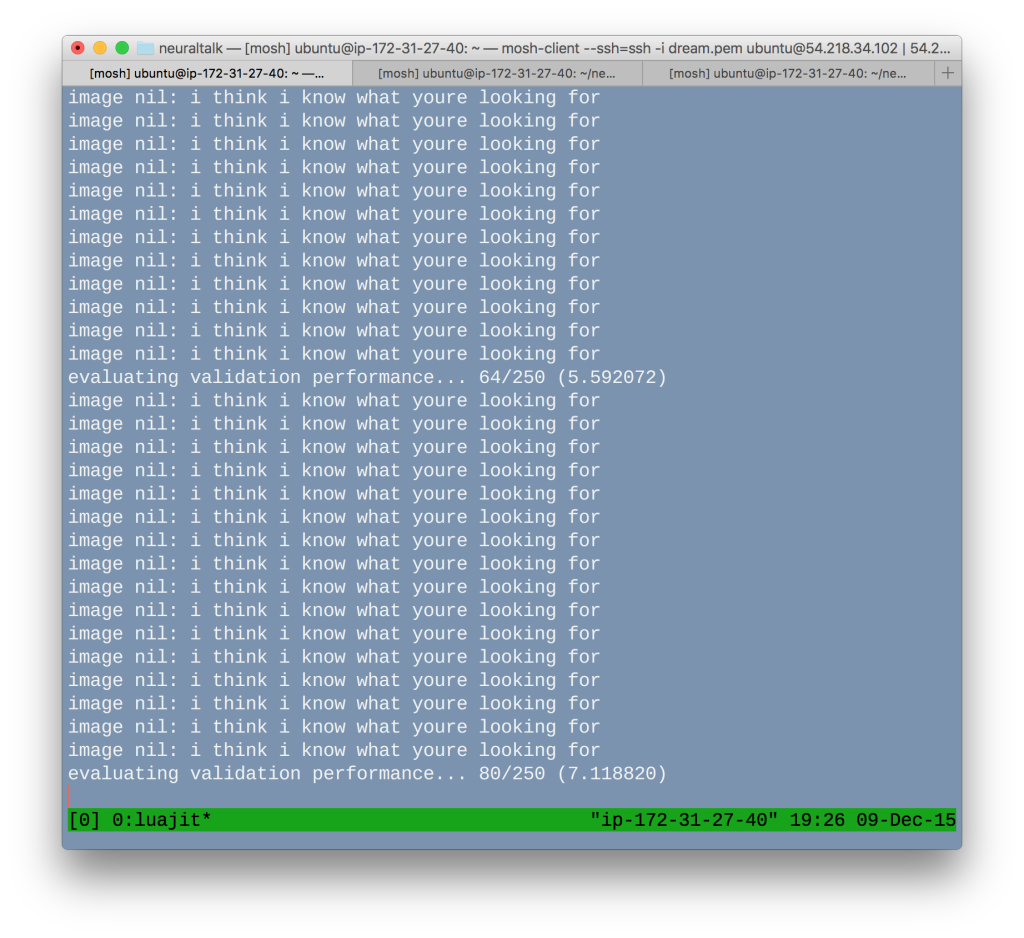

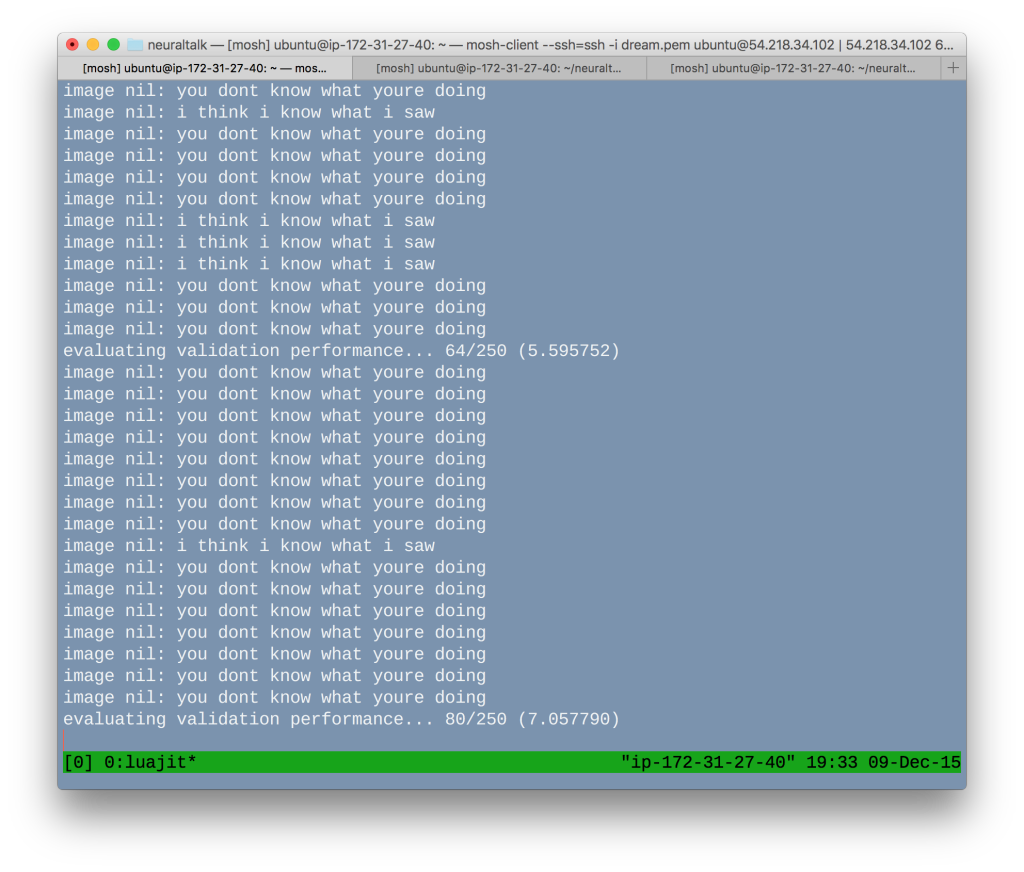

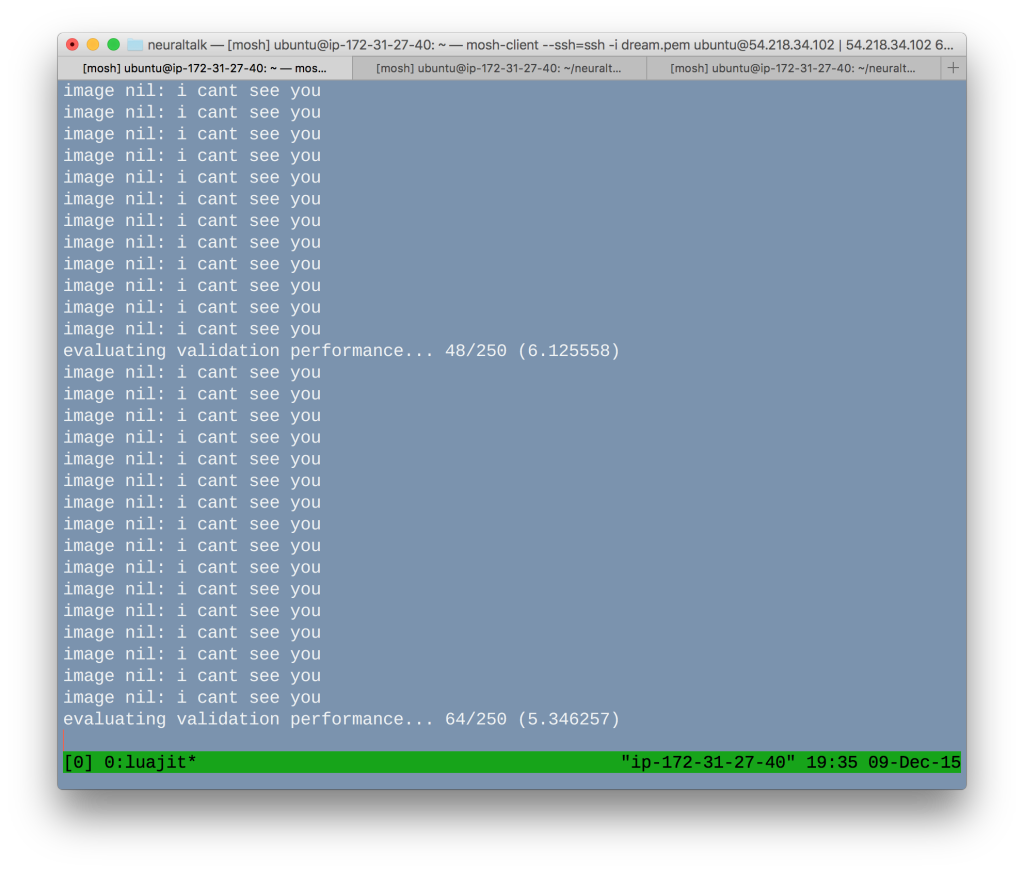

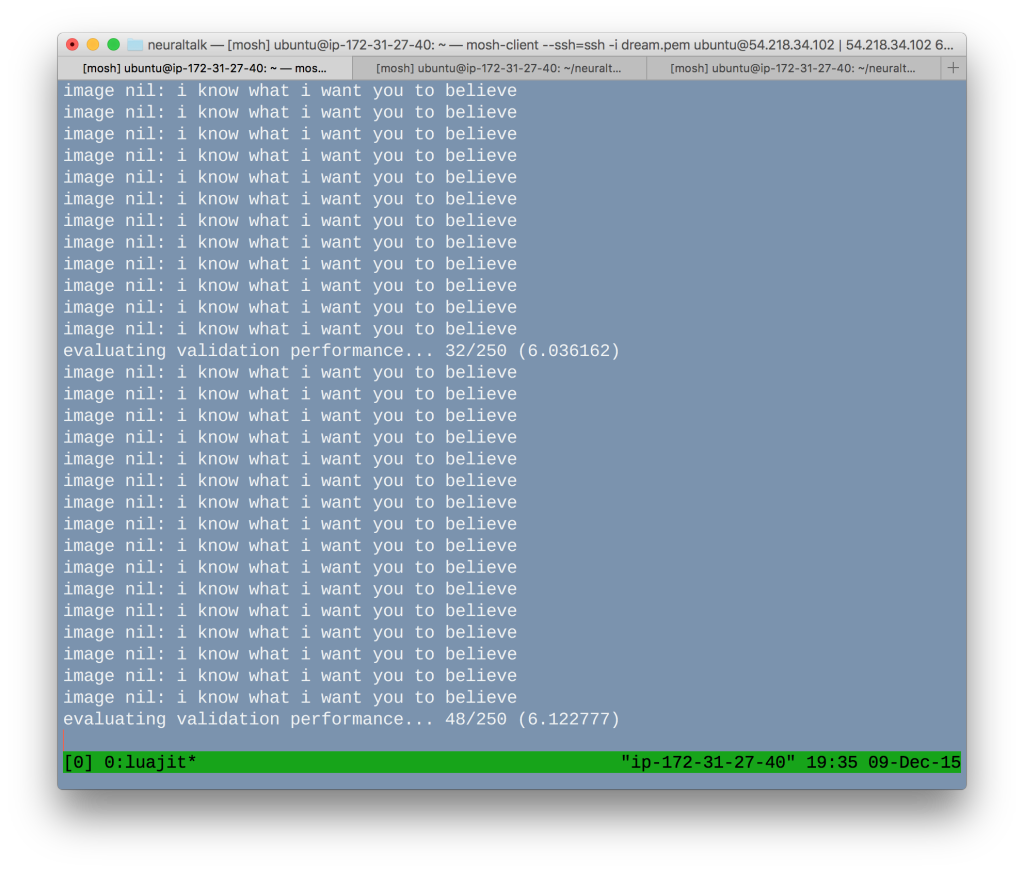

The training process has involved a lot of trial and error. The learning process seems to just halt sometimes, and the machine often wants to issue the same caption for every image.

Rather than training the machine on an image caption dataset, I trained it on dialogue from subtitles, matched with frames extracted at 10-second intervals from every episode of The X-Files. This is just an experiment, and I’m not expecting stellar results.
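
To make the frame-and-dialogue pairing concrete, here is a minimal sketch in Python. It is not the code used for this project. It assumes the subtitles are in SRT format, that frames are sampled at exact multiples of 10 seconds, and that a frame is kept only if a subtitle is on screen at that moment. The function names, the dictionary keys, and the sample dialogue are all hypothetical placeholders.

```python
import re

# Matches an SRT timing line such as "00:01:02,500 --> 00:01:05,000".
TIMING = re.compile(
    r"(\d+):(\d+):(\d+)[,.](\d+)\s*-->\s*(\d+):(\d+):(\d+)[,.](\d+)"
)


def to_seconds(h, m, s, ms):
    """Convert SRT timestamp fields (3-digit milliseconds) to seconds."""
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000.0


def parse_srt(text):
    """Return a list of (start, end, dialogue) tuples from SRT text."""
    cues = []
    for block in re.split(r"\n\s*\n", text.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        for i, ln in enumerate(lines):
            match = TIMING.search(ln)
            if match:
                g = match.groups()
                dialogue = " ".join(lines[i + 1:])
                if dialogue:
                    cues.append((to_seconds(*g[:4]), to_seconds(*g[4:]), dialogue))
                break
    return cues


def pair_frames_with_dialogue(cues, duration, interval=10):
    """Pair each sampled timestamp with the dialogue on screen at that time.

    Timestamps with no active subtitle are skipped (an assumption of this
    sketch; other choices, like using the nearest line, are possible).
    """
    pairs = []
    for t in range(0, int(duration) + 1, interval):
        for start, end, dialogue in cues:
            if start <= t <= end:
                pairs.append({"time": t, "caption": dialogue})
                break
    return pairs


# Placeholder subtitle text, not real dialogue from the show.
sample = """1
00:00:08,000 --> 00:00:11,500
First placeholder line.

2
00:00:19,000 --> 00:00:22,000
Second placeholder line,
split across two rows.
"""

pairs = pair_frames_with_dialogue(parse_srt(sample), duration=30)
for pair in pairs:
    print(pair["time"], pair["caption"])
```

Each resulting pair could then be attached to the frame image grabbed at the same timestamp. Skipping silent frames keeps every training image tied to a real line of dialogue, at the cost of throwing away a lot of footage.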

That said, the robot is already spitting out some pretty weird and genuinely creepy lines. I can’t wait until I have a version that’s trained well enough to feed in new images and get varied results.