For my final projects in Conversation and Computation with Lauren McCarthy and This Is The Remix with Roopa Vasudevan, I iterated on my word.camera project. I added a few new features to the web application, including a private API that I used to build a physical version of word.camera inside a Mamiya C33 TLR (twin-lens reflex) camera.

The current version of the code remains open source and available on GitHub, and the project continues to receive positive mentions in the press.

On April 19, I announced two new features for word.camera via the TinyLetter email newsletter I advertised on the site.

Hello,

Thank you for subscribing to this newsletter, wherein I will provide occasional updates regarding my project, word.camera.

I wanted to let you know about two new features I added to the site in the past week:

word.camera/albums: You can now generate ebooks (DRM-free ePub format) from sets of lexographs.

word.camera/postcards: You can support word.camera by sending a lexograph as a postcard, anywhere in the world for $5. I am currently a graduate student, and proceeds will help cover the cost of maintaining this web application as a free, open source project.

Also:

word.camera/a/XwP59n1zR: A lexograph album containing some of the best results I’ve gotten so far with the camera on my phone.

1, 2, 3: A few random lexographs I did not make that were popular on social media.

Best,

Ross Goodwin

rossgoodwin.com

word.camera

Next, I set to work on the physical version. I decided to use a technique I had developed for another project earlier in the semester to create word.camera epitaphs composed of highly relevant paragraphs from novels. To stay within fair use of copyrighted material, I decided that all of this additional data would be processed locally on the physical camera.

I assembled the collection from a mix of novels that are considered classics and novels I personally enjoyed, and I included only paragraphs over 99 characters in length. In total, the collection contains 7,113,809 words from 48 books.
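A minimal sketch of that paragraph filter, assuming each book is plain text with paragraphs separated by blank lines (the post doesn’t say how the source texts were formatted). The names `split_paragraphs`, `long_paragraphs`, and `word_count` are hypothetical, and the word tally is a simple whitespace split, not necessarily how the 7,113,809 figure was computed.

```python
import re

MIN_CHARS = 100  # "over 99 characters in length"


def split_paragraphs(text):
    """Split raw text on blank lines and collapse each paragraph's internal whitespace."""
    chunks = re.split(r'\n\s*\n', text)
    return [' '.join(chunk.split()) for chunk in chunks if chunk.strip()]


def long_paragraphs(text, min_chars=MIN_CHARS):
    """Keep only paragraphs that are at least min_chars characters long."""
    return [p for p in split_paragraphs(text) if len(p) >= min_chars]


def word_count(paragraphs):
    """Count whitespace-separated words across the kept paragraphs."""
    return sum(len(p.split()) for p in paragraphs)


# Toy input: two short paragraphs around one 149-character paragraph.
book = "Short line.\n\n" + "word " * 30 + "\n\nAnother short one."
kept = long_paragraphs(book)
print(len(kept), word_count(kept))  # 1 30
```

Filtering on character length rather than word count drops dialogue fragments and chapter headings cheaply, which leaves paragraphs long enough to carry a few recognizable concepts.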

Below is an infographic showing all the books in my corpus and the relative number of words included from each.

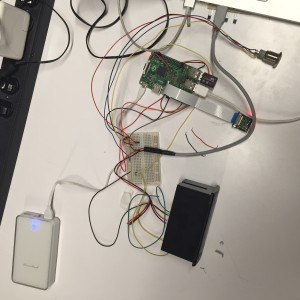

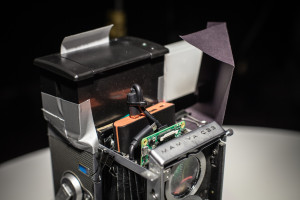

To build the physical version of word.camera, I purchased the following materials:

- Raspberry Pi 2 board

- Raspberry Pi camera module

- Two (2) 10,000 mAh batteries

- Thermal receipt printer

- 40 female-to-male jumper wires

- Three (3) extra-small prototyping perf boards

- LED button

After some tinkering, I was able to put together the arrangement pictured below, which could print raw word.camera output on the receipt printer.

I thought for a long time about the type of case I wanted to put the camera in. My original idea was a photobooth, but I felt that a portable camera—along the lines of Matt Richardson’s Descriptive Camera—might take better advantage of the Raspberry Pi’s small footprint.

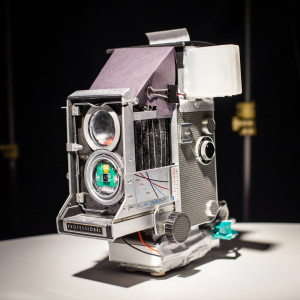

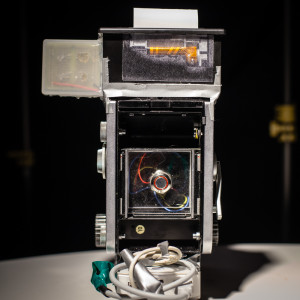

Rather than fabricating my own case, I decided that an antique film camera might offer a recognizable exterior to draw in people unfamiliar with the project. (And I was making it for a remix-themed class, after all.) So I bought a lot of three broken TLR film cameras on eBay and gutted the Mamiya C33, which was in the best condition of the three. (N.B. I’m an antique camera enthusiast, and I own a working example of the C33’s predecessor, the C2. Despite the C33’s broken condition, cutting open its bellows felt sacrilegious.)

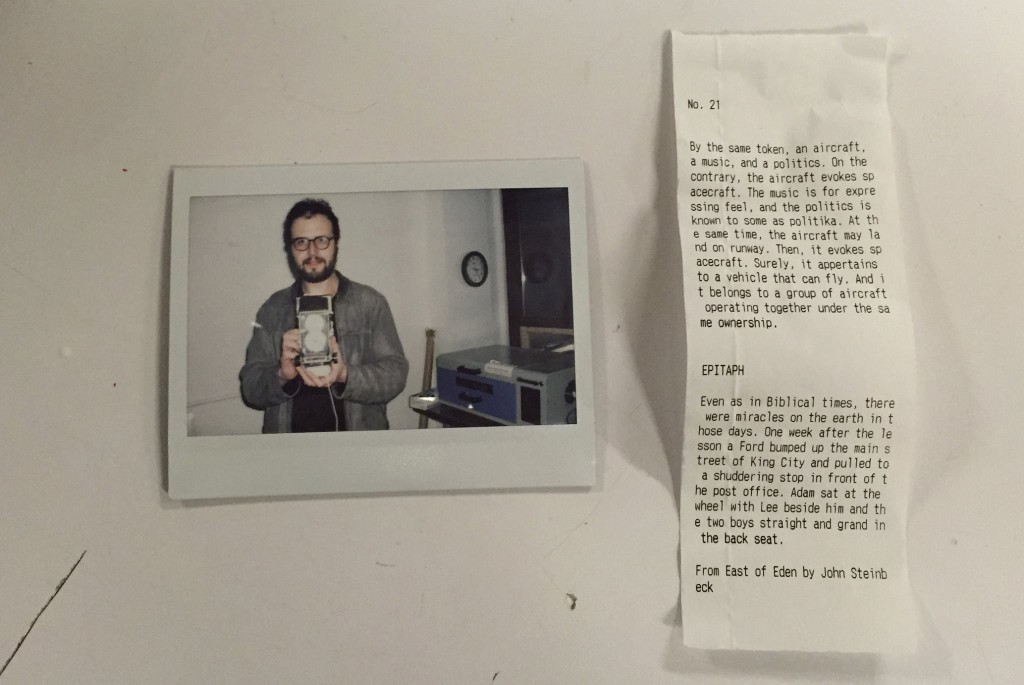

I laser cut some clear acrylic I had left over from the traveler’s lamp project to fill the lens holes and mount the LED button on the back of the camera. Here are some photos of the finished product:

And here is the code that’s running on the Raspberry Pi (the crux of the matching algorithm is the relevance sum inside `findIntersection`):

```python
import uuid
import picamera
import RPi.GPIO as GPIO
import requests
from time import sleep
import os
import json
from Adafruit_Thermal import *
from alchemykey import apikey
import time

# SHUTTER COUNT / startNo GLOBAL
startNo = 0

# Init Printer
printer = Adafruit_Thermal("/dev/ttyAMA0", 19200, timeout=5)
printer.setSize('S')
printer.justify('L')
printer.setLineHeight(36)

# Init Camera
camera = picamera.PiCamera()

# Init GPIO
GPIO.setmode(GPIO.BCM)

# Working Dir
cwd = '/home/pi/tlr'

# Init Button Pin
GPIO.setup(21, GPIO.IN, pull_up_down=GPIO.PUD_UP)

# Init LED Pin
GPIO.setup(20, GPIO.OUT)

# Init Flash Pin
GPIO.setup(16, GPIO.OUT)

# LED and Flash Off
GPIO.output(20, False)
GPIO.output(16, False)

# Load lit list: paragraphs with their title, author, and concept relevances
with open(cwd + '/lit.json', 'r') as f:
    lit = json.load(f)


def blink(n):
    """Blink the button LED n times."""
    for _ in range(n):
        GPIO.output(20, True)
        sleep(0.2)
        GPIO.output(20, False)
        sleep(0.2)


def takePhoto():
    """Capture a photo with the flash on and return its file path."""
    fn = str(int(time.time())) + '.jpg'  # TODO: Change to timestamp hash
    fp = cwd + '/img/' + fn
    GPIO.output(16, True)
    camera.capture(fp)
    GPIO.output(16, False)
    return fp


def getText(imgPath):
    """Upload the photo to word.camera and return its text output."""
    endPt = 'https://word.camera/img'
    payload = {'Script': 'Yes'}
    with open(imgPath, 'rb') as img:
        files = {'file': img}
        response = requests.post(endPt, data=payload, files=files)
    return response.text


def alchemy(text):
    """Request ranked concepts for the text from AlchemyAPI."""
    endpt = "http://access.alchemyapi.com/calls/text/TextGetRankedConcepts"
    payload = {"apikey": apikey,
               "text": text,
               "outputMode": "json",
               "showSourceText": 0,
               "knowledgeGraph": 1,
               "maxRetrieve": 500}
    headers = {'content-type': 'application/x-www-form-urlencoded'}
    r = requests.post(endpt, data=payload, headers=headers)
    return r.json()


def findIntersection(testDict):
    """Return the lit paragraph whose shared concepts have the highest summed relevance."""
    returnText = ""
    returnTitle = ""
    returnAuthor = ""
    recordInter = set(testDict.keys())
    relRecord = 0.0
    for doc in lit:
        inter = set(doc['concepts'].keys()) & set(testDict.keys())
        if inter:
            # Score: relevance of each shared concept on both sides, summed
            relSum = sum([doc['concepts'][tag] + testDict[tag] for tag in inter])
            if relSum > relRecord:
                relRecord = relSum
                recordInter = inter
                returnText = doc['text']
                returnTitle = doc['title']
                returnAuthor = doc['author']
    doc = {
        'text': returnText,
        'title': returnTitle,
        'author': returnAuthor,
        'inter': recordInter,
        'record': relRecord
    }
    return doc


def puncReplace(text):
    """Swap typographic characters the thermal printer can't render for ASCII."""
    replaceDict = {
        u'\u2014': '---',  # em dash
        u'\u2013': '--',   # en dash
        u'\u2018': "'",    # left single quote
        u'\u2019': "'",    # right single quote
        u'\u201c': '"',    # left double quote
        u'\u201d': '"',    # right double quote
        u'\u00b4': "'",    # acute accent
        u'\u00eb': 'e',    # e with diaeresis
        u'\u00f1': 'n'     # n with tilde
    }
    for key in replaceDict:
        text = text.replace(key, replaceDict[key])
    return text


def toPrintable(text):
    """Replace punctuation first, then escape any remaining non-ASCII characters."""
    return puncReplace(text).encode('ascii', 'xmlcharrefreplace').decode('ascii')


blink(5)

while 1:
    input_state = GPIO.input(21)
    if not input_state:  # button pressed (pin pulled low)
        GPIO.output(20, True)
        try:
            # Get Word.Camera Output
            print("GETTING TEXT FROM WORD.CAMERA...")
            wcText = getText(takePhoto())
            blink(3)
            GPIO.output(20, True)
            print("...GOT TEXT")

            # Print
            # print("PRINTING PRIMARY")
            # startNo += 1
            # printer.println("No. %i\n\n\n%s" % (startNo, wcText))

            # Get Alchemy Data
            print("GETTING ALCHEMY DATA...")
            data = alchemy(wcText)
            tagRelDict = {concept['text']: float(concept['relevance'])
                          for concept in data['concepts']}
            blink(3)
            GPIO.output(20, True)
            print("...GOT DATA")

            # Make Match
            print("FINDING MATCH...")
            interDoc = findIntersection(tagRelDict)
            print(interDoc)
            interText = toPrintable(interDoc['text'])
            interTitle = toPrintable(interDoc['title'])
            interAuthor = toPrintable(interDoc['author'])
            blink(3)
            GPIO.output(20, True)
            print("...FOUND")

            grafList = [p for p in wcText.split('\n') if p]
            # Choose primary paragraph
            primaryText = min(grafList, key=lambda x: x.count('#'))
            url = 'word.camera/i/' + grafList[-1].strip().replace('#', '')

            # Print
            print("PRINTING...")
            startNo += 1
            printStr = "No. %i\n\n\n%s\n\n%s\n\n\n\nEPITAPH\n\n%s\n\nFrom %s by %s" % (
                startNo, primaryText, url, interText, interTitle, interAuthor)
            printer.println(printStr)
        except Exception:
            print("SOMETHING BROKE")
            blink(15)
        GPIO.output(20, False)
    sleep(0.05)  # poll the button about 20 times per second instead of spinning the CPU
```
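Stripped of the camera, printer, and AlchemyAPI calls, the scoring inside `findIntersection` can be tried on its own. The sketch below re-expresses that logic as a pure Python 3 function; `best_match` and the two-entry corpus are hypothetical, and the entries carry only the `title` and `concepts` keys (concept name mapped to relevance) that the Pi code reads from `lit.json`.

```python
def best_match(photo_concepts, corpus):
    """Pick the corpus entry whose shared concepts have the largest summed relevance.

    photo_concepts: dict mapping concept -> relevance for the photo's text.
    corpus: list of dicts, each with a 'concepts' dict in the same shape.
    Returns (entry, score, shared_concepts); entry is None if nothing overlaps.
    """
    best, best_score, best_shared = None, 0.0, set()
    for entry in corpus:
        shared = set(entry['concepts']) & set(photo_concepts)
        if not shared:
            continue
        # Add both sides' relevance for every concept the two texts have in common.
        score = sum(entry['concepts'][c] + photo_concepts[c] for c in shared)
        if score > best_score:  # strict '>' keeps the earlier entry on ties
            best, best_score, best_shared = entry, score, shared
    return best, best_score, best_shared


# Hypothetical toy data, not taken from the real corpus.
corpus = [
    {'title': 'Book A', 'concepts': {'ocean': 0.9, 'ship': 0.7}},
    {'title': 'Book B', 'concepts': {'city': 0.8, 'street': 0.6, 'ocean': 0.2}},
]
photo = {'street': 0.95, 'city': 0.5, 'dog': 0.4}

entry, score, shared = best_match(photo, corpus)
print(entry['title'], round(score, 2), sorted(shared))  # Book B 2.85 ['city', 'street']
```

Because the score sums over every shared concept, a paragraph that overlaps on several moderately relevant concepts can outrank one that shares a single highly relevant concept.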

Thanks to a transistor pulsing circuit that keeps the printer’s battery awake, and some code that automatically tethers the Raspberry Pi to my iPhone, the Fiction Camera is fully portable. I’ve been walking around Brooklyn and Manhattan over the past week making lexographs—the device is definitely a conversation starter. As a street photographer, I’ve noticed that people seem to be more comfortable having their photograph taken with it than with a standard camera, possibly because the visual image (and whether they look alright in it) is far less important.

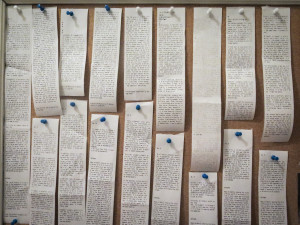

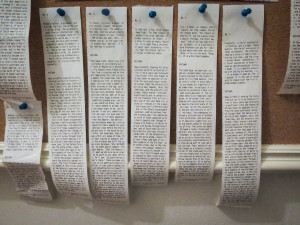

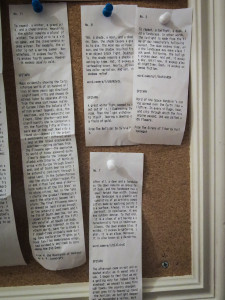

As a result of these wanderings, I’ve accumulated a large number of lexograph receipts. Earlier iterations of the receipt design contained longer versions of the word.camera output. Eventually, I settled on a version that contains a number (indicating how many lexographs have been taken since the device was last turned on), one paragraph of word.camera output, a URL to the word.camera page containing the photo and complete output, and a single high-relevance paragraph from a novel.

I also demonstrated the camera at ConvoHack, our final presentation event for Conversation and Computation, held at Babycastles gallery. I passed out over 50 lexograph receipts that evening alone.

Photographs by Karam Byun

Often, when photographing a person, the camera will output a passage from a novel featuring a character description that subjects seem to relate to. Many people have told me the results have qualities that remind them of horoscopes.